Video

Drumbrush

interface with AI to create digital paintings by playing the drums

.pdf, 90MB

The aim of Drumbrush (formerly Drum.ai) is to create an accessible system that lets drummers with no technical background interact iteratively with supervised learning models to produce rich, varied, and pleasant digital paintings. Drumbrush builds on work in creative machine learning for music, as well as posthumanist design, to give drummers a highly usable way to work with sensors and machine learning. The output visuals simulate the aesthetic of paint (particularly watercolor), drawing a visual parallel from the linguistic comparison of brushstrokes and drumstrokes.

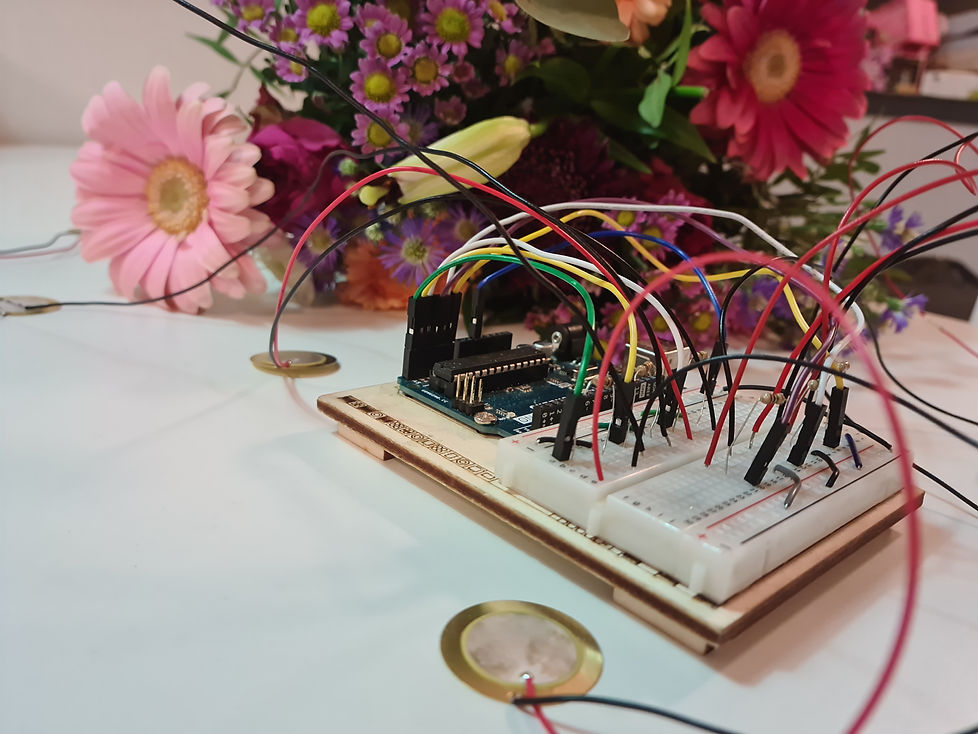

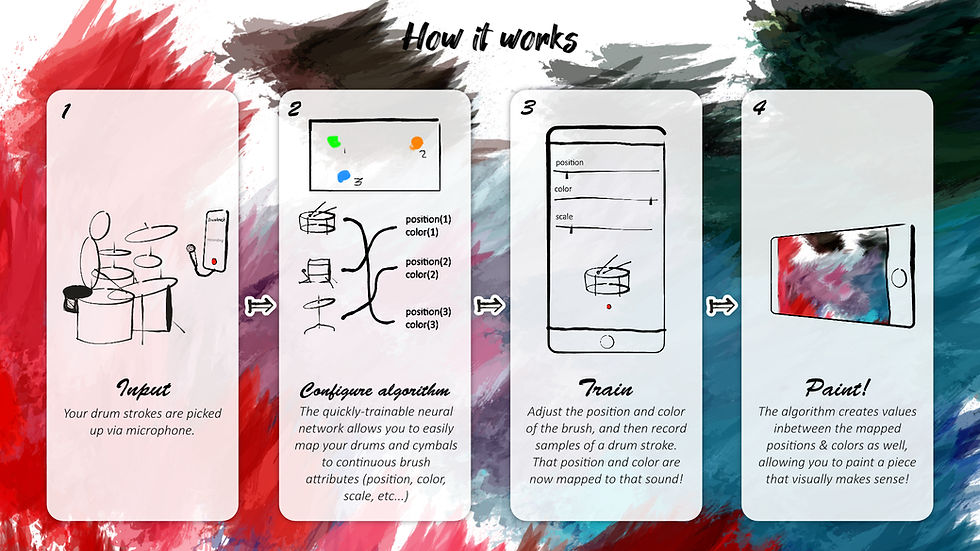

The system consists of 6 piezo sensors and a microphone, which pick up drumstrokes as input. These signals are sent to Wekinator, a general-purpose tool for interactive supervised learning in music and other real-time domains, built by AI and music researcher Rebecca Fiebrink. A neural network regression model (6 inputs, 5 outputs) interprets the signals and outputs five continuous values (red, green, blue, x-position, y-position) that change in real time with the input. These values are sent to a Processing 3 sketch, which uses them to control the color and position of a digital paintbrush (though the sketch can map them to other attributes of the brush if desired).
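The data flow described above can be sketched in a few lines of Python. This is an illustration only, not the project's actual code: it assumes Wekinator's default OSC settings (inputs received on port 6448 at `/wek/inputs`, outputs sent at `/wek/outputs`), assumes the five outputs are trained to lie in the range 0 to 1, and uses a hypothetical 800×600 canvas. The function names are made up for this example, and the real brush logic lives in the Processing sketch.

```python
import socket
import struct

# Assumed Wekinator defaults; the project may use different settings.
WEKINATOR_HOST = "127.0.0.1"
WEKINATOR_INPUT_PORT = 6448
INPUT_ADDRESS = "/wek/inputs"


def _osc_pad(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def _read_osc_string(packet, offset):
    """Read a padded OSC string and return (string, next_offset)."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    return text, offset + ((end - offset) // 4 + 1) * 4


def encode_osc_floats(address, values):
    """Build an OSC message carrying only 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = struct.pack(">%df" % len(values), *values)
    return _osc_pad(address) + _osc_pad(type_tags) + payload


def decode_osc_floats(packet):
    """Parse a float-only OSC message into (address, list_of_floats)."""
    address, offset = _read_osc_string(packet, 0)
    type_tags, offset = _read_osc_string(packet, offset)
    count = type_tags.count("f")
    values = struct.unpack_from(">%df" % count, packet, offset)
    return address, list(values)


def send_sensor_frame(sensor_values):
    """Send one frame of 6 sensor readings to Wekinator over UDP."""
    packet = encode_osc_floats(INPUT_ADDRESS, sensor_values)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (WEKINATOR_HOST, WEKINATOR_INPUT_PORT))


def _clamp01(value):
    return max(0.0, min(1.0, value))


def brush_from_outputs(outputs, width=800, height=600):
    """Map (red, green, blue, x, y) in 0..1 to an RGB color and a pixel position."""
    red, green, blue, x, y = (_clamp01(v) for v in outputs)
    color = (round(red * 255), round(green * 255), round(blue * 255))
    position = (round(x * width), round(y * height))
    return {"color": color, "position": position}
```

In this sketch, a receiver listening for `/wek/outputs` messages would pass each decoded packet to `brush_from_outputs` and draw with the result. Clamping keeps the brush on the canvas if the regression model briefly overshoots its trained range.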

In keeping with my goal of a career in UI/UX design, Drumbrush also includes a UI/UX mockup for a mobile application, made in Figma. The mockup further develops the idea of making machine learning usable for creatives by imagining an idealized version of Drumbrush as a smartphone app, positioned as a replacement for general-purpose sound recording apps (such as voice memos) that is better suited to musicians.

Demo day media

Helping a visitor train their own model